Image: OpenAI

OpenAI unveiled GPT-4 today, its next-generation large language model and the technical foundation for both ChatGPT and Microsoft's Bing AI chatbot. It's a profound upgrade, one that could open the door to major advances in both the capabilities and the features of today's AI technology.

OpenAI announced GPT-4 on its blog this morning, and you can already try it out in ChatGPT, the AI chatbot that OpenAI has published to the web. (But only in ChatGPT Plus, the paid version, and even there it's capped at 100 messages every four hours.) Microsoft also confirmed that the latest version of its Bing Chat tool runs on GPT-4.

Why does this matter? We've listed several major upgrades that GPT-4 offers, beginning with the biggest: It's not just about words anymore.

Now GPT-4 can see the world with visual input

“GPT-4 can accept images as inputs and generate captions, classifications, and analyses,” according to OpenAI. Yes, that means what you think it does: ChatGPT and Bing will be able to “see” the world around them, or at least interpret images the way image search already does.

What does this mean in practice? GPT-4 is already looking at the real world through Be My Eyes, an app for people who have difficulty seeing. Be My Eyes uses a smartphone's camera and describes what the camera sees.

In a GPT-4 video aimed at developers, Greg Brockman, president and co-founder of OpenAI, showed how GPT-4 interpreted a sketch, transformed it into a Web site, then supplied the code for that site.

GPT-4’s longer output: Fanfiction just got epic

“GPT-4 is capable of handling over 25,000 words of text, allowing for use cases like long-form content creation, extended conversations, and document search and analysis,” according to OpenAI. As my colleague Michael Crider put it, “Pray for the Kindle self-publishing moderators.”

Why? Because ChatGPT’s output with GPT-4 will not only get longer, but it will get more creative, too: “GPT-4 is more creative and collaborative than ever before,” OpenAI said. “It can generate, edit, and iterate with users on creative and technical writing tasks, such as composing songs, writing screenplays, or learning a user’s writing style.”

There's a practical benefit, too. From examples that OpenAI showed, it appears that you'll be able to paste the contents of web pages directly into the prompt. GPT-4 should understand the internet in general, but GPT-3 was trained only on data up to 2021. Feeding in current pages should give GPT-4 and ChatGPT context they might not otherwise have.

GPT-4 is simply smarter

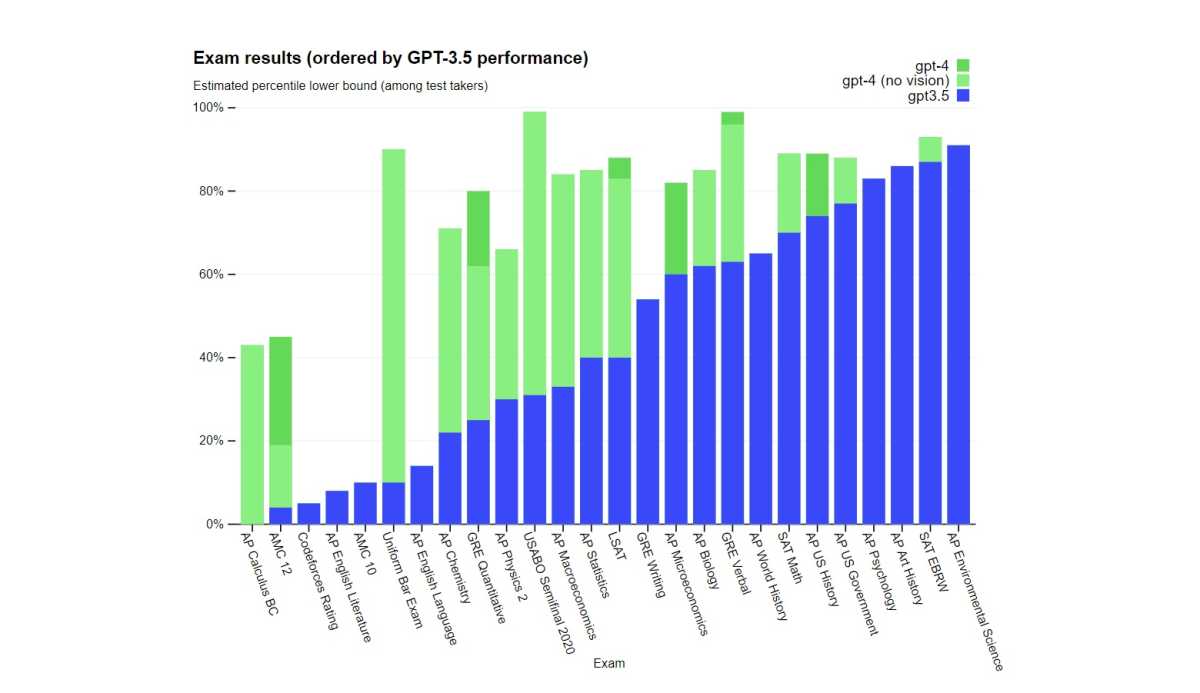

Large language models don't natively display intelligence. But they do model the relationships between words, and the more sophisticated GPT-4 handles relationships and context even better. One example: ChatGPT scored in the 10th percentile on a simulated Uniform Bar Exam, while GPT-4 scored in the 90th percentile. On the Biology Olympiad, the vision-enabled GPT-4 scored in the 99th percentile, while ChatGPT finished in the 31st.

Duolingo is also using GPT-4, OpenAI said, to improve its app's awareness of what you're saying and how you should be saying it.

Can it even do your taxes? Well, sort of. Brockman pasted in the tax code, and GPT-4 calculated a hypothetical tax liability.

GPT-4: It’s safer?

According to OpenAI, researchers “incorporated more human feedback, including feedback submitted by ChatGPT users, to improve GPT-4's behavior.” The company also brought in 50 human experts to provide feedback on AI safety.

That, of course, will have to be proven over time. But in every other respect, ChatGPT and Bing just got a lot smarter.

This story was updated at 11:27 PM PT on March 14 with additional examples.

Author: Mark Hachman, Senior Editor