
Image: Microsoft

AI will change the way that we work. Or so say the most fervent purveyors of the tech, who now include Microsoft. But after seeing ChatGPT, Dall-E, and other AI systems integrated into the latest versions of Windows 11, Office, and the company's Microsoft 365 platform, I can't say that I agree.

Make no mistake, Microsoft is pushing new tools like its Copilot system hard, integrating it into systems that are staples for the company and hundreds of millions of users. But speaking as a power user — and accepting the limited perspective that gives me for many who are not — I can’t see these new tools being anything more than an occasional curiosity.

They won’t change the way that I work, and I think even those who stand to benefit the most from them will be hesitant to try.

It works, until it doesn’t

To be sure, there are elements of the system that can be beneficial. ChatGPT-based AI text generation is dramatic, filling in pages of information in just a minute or two, far faster than the most frantic keyboard jockey could manage. Image generation is just as impressive, spitting out incredibly detailed and photorealistic images from just a few lines of text prompt. If you're not a natural writer, and you can't navigate your way around Paint, this stuff seems like Arthur C. Clarke's classic sufficiently advanced technology, "indistinguishable from magic."

Until it doesn't. The term "Artificial Intelligence" brings science fiction staples like Star Trek's Commander Data to mind. But this is a misnomer, and frankly, I think it's a deliberately misleading one. Even the most impressive things that AI spits out in its current form are based on pre-existing algorithms. Incredibly, nearly incomprehensibly complex algorithms, but algorithms nonetheless. And they follow a law of computing that hasn't changed in the better part of a century: they can't do anything they haven't been designed, or told, to do.

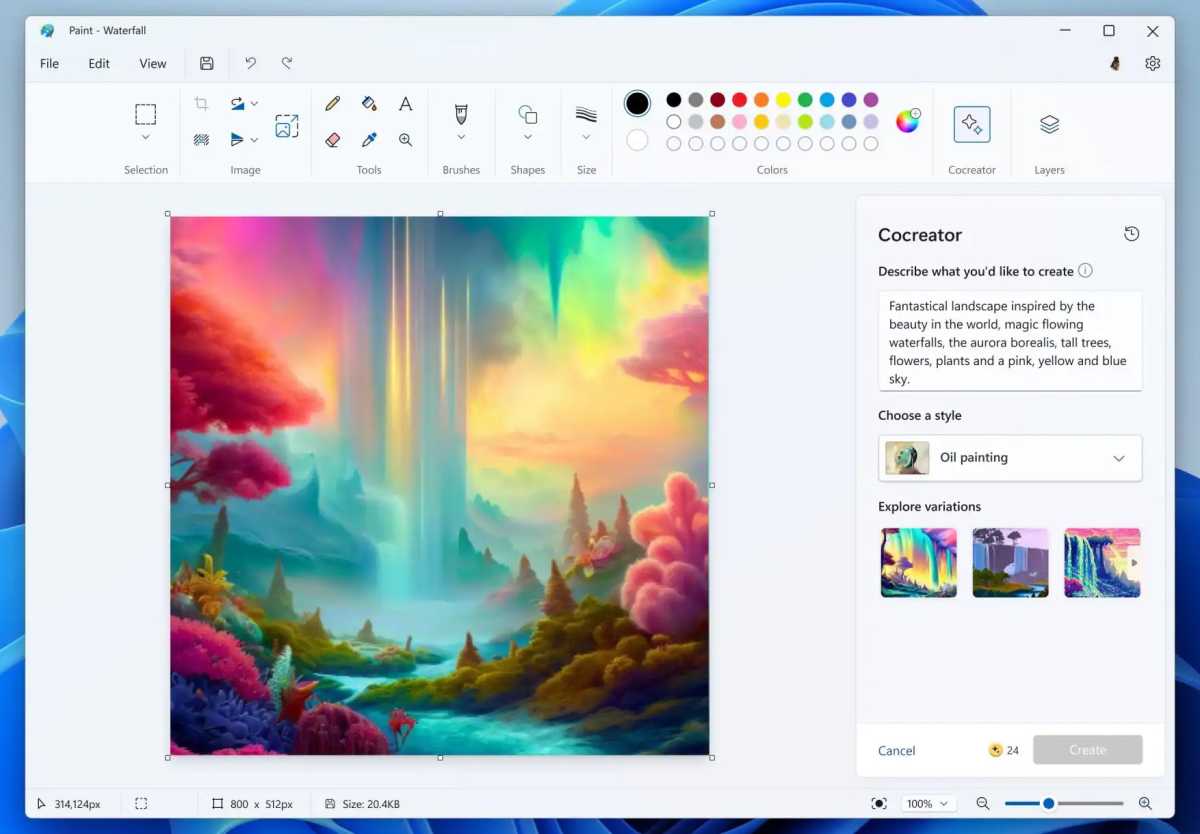

I'll use the new AI-infused Paint as an example. At Microsoft's fall Surface event in New York, I was shown Paint automatically distinguishing between the foreground and background, effortlessly blurring the beach in a photo of a dog running across it. Impressive, and useful for those who aren't acclimated to Photoshop's tools (or don't want to pay for them). But mobile apps have been doing these tricks for years, and they don't require the massive resources of a remote data center or an always-on connection.

Something that does require that “big iron” power is image generation. A photo of a large ornate building was separated from the sky in just a few clicks, with the new layering tools allowing the demonstrator to put in anything I asked for behind it. I asked for a tornado, and it delivered a Dall-E-generated image of a twister right out of a Texas tall tale.


But this was a separate image from the building, on a separate layer, not using the building as a reference at all. I could place it behind the building, squishing together two completely different images with lighting and perspective that didn't match up. What the new AI-infused Paint can't do, and what I suspect Microsoft would like you to imagine that it can, is place that tornado in the existing image of the building as if it were an effect from a movie or a professional marketing studio.

What these AI image generation tools can do, at their current limits, is the kind of thing even an intermediate user could learn with half an hour of tutelage on YouTube and an image search. What does that do for you? It saves you about half an hour, at best, for a result that looks like, well, half an hour's work in Paint. As someone who uses graphic design tools daily, I didn't come away with any immediate fears of human obsolescence.

Sifting through the Word salad

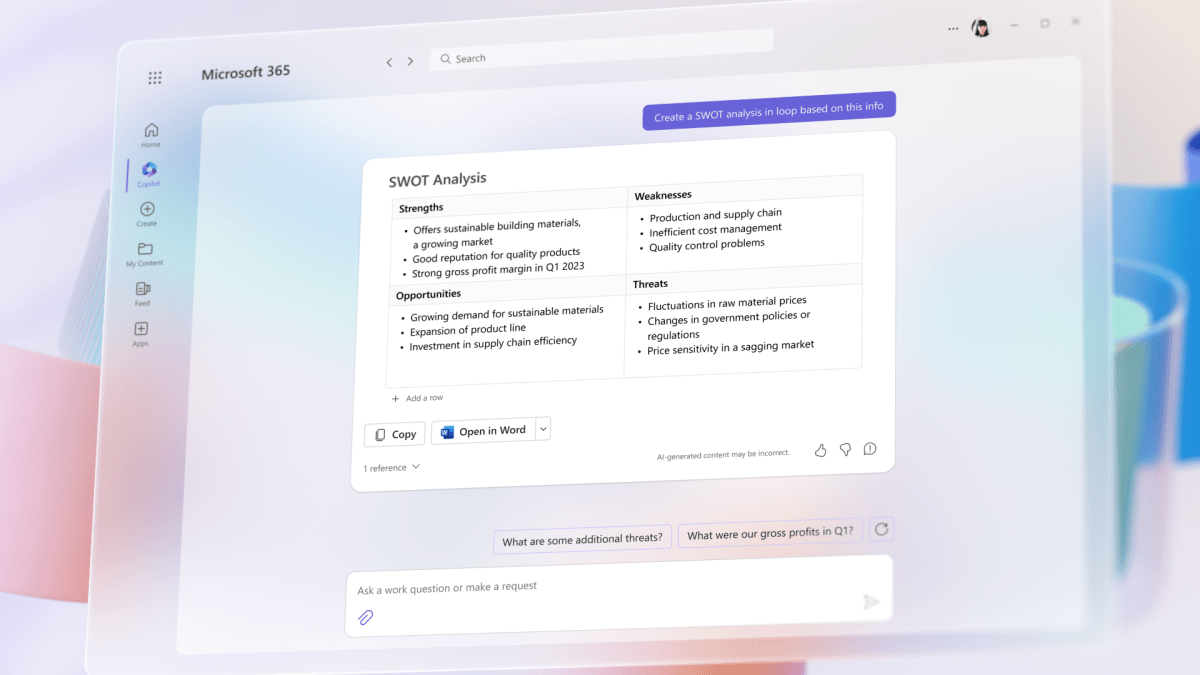

Speaking of which: text generation. ChatGPT’s ability to gobble up and spit out huge amounts of textual info is, indeed, impressive. But when you want to do more nuanced things with that text — things that humans need a lot of time and attention to do — it falters. A demonstrator took a 5000-word written document and told Copilot to summarize it in Word. This brought the count down to a little over 2000 words…which is still more than you’d want to read in front of a full table of your coworkers or across a Zoom screen.

When I asked him to chop it down to under 1000 words, the system choked. There was simply too much information for it to compress without losing the essentials. A human could do it, though it would take someone skilled enough to sift the croutons of actionable and immediate information from the word salad. Copilot couldn't, returning near-identical results several times, none of them what was requested.

And after several minutes of a Microsoft Azure server thinking to itself, the demonstrator noted that you’d still need to read through the resulting wall of text to check that it’s accurate. Or perhaps more distressingly, that the system didn’t wholly invent information that wasn’t there.

An Outlook demonstration did just that with an auto-generated reply of a few hundred words, inserting ideas that the human operator never had. Ideas which might have gotten him in trouble if he claimed they were his own, and which he didn’t remember later. We’ve seen this kind of thing happen already for people eager to replace their online selves with AI-powered facsimiles.

So how much work is this actually saving, if the system’s limits are well below that of a human operator and its output still needs to be checked by hand and eye? Not enough for a manager to confidently chop headcount, which I suspect is the outcome many executives were hoping for. And that’s in the best-case scenario, when the system worked without returning an error from the remote server. That happened more than once, and at least once at every demo I was shown.

Copilot’s ability to save time is remarkable in highly specific situations. For example, working with that same 5000-word document, the demonstrator was able to generate a 20-slide PowerPoint deck with relevant bullet points. It even had fairly attractive formatting, complete with non-copyrighted image backdrops that were unspecific, but broke up the black-and-white slides nicely. The system could insert auto-generated images, license-free images from a Bing search, or images saved in a local folder.

That’s a time saver. In the span of a few minutes the system generated a PowerPoint presentation that would have taken an expert around an hour to put together. But again, the result would require almost as much time to check manually…and its results weren’t entirely reliable or repeatable. And a system that can’t be consistently relied upon isn’t one that can replace a human worker, even a low-level one, though it might augment your work on occasion.

Remembering the lessons of Windows 8…or not

Which brings up another point: Why is Microsoft demonstrating all this new technology, and pushing it so quickly into its corporate tools, when it’s clearly missing so much functionality? The closest comparison that comes to mind is the quick shift to touch-based interfaces in Windows 8, anticipating a world full of people working on touch-primary tablets in the wake of the iPad’s launch.

A world that, a decade later, doesn’t seem to have arrived. We’re back to the old Start button and menu, as gently evolved as it might be, and work in Windows is still primarily driven by mouse and keyboard. Even its impressive support for touch is, largely, replicating existing tools like cursors and scroll wheels. That mobile-inspired revolution never came.

So after Microsoft learned from that experience and walked many of those concepts back in Windows 10, why is it so eager to leap off yet another uncertain technological precipice? I can't say with certainty. But if I were to put on my tech analyst hat (a big, floppy, somewhat ridiculous hat, worn in the hopes that no one confuses what I'm about to say for investment advice), I'd put the blame on a lot of the hot air blowing around the tech market.


I suspect that investors, eager to get on the AI trend and hoping that its most fantastical promises come to fruition, have caught the ear of Microsoft's executive team. Those promises include replacing a huge portion of jobs filled by squishy, entitled humans, demanding such unreasonable expenses as office space and health insurance benefits. Even replacing a small number of low-level workers with an Azure cloud would represent huge savings, and a huge potential profit for Microsoft if it can get the monetization right.

Microsoft, its investors, or some combination of both may be experiencing a Fear Of Missing Out, motivated by AI tools of questionable utility cropping up at more or less every competitor. Perhaps it has more applications at the enterprise level, where huge, number-crunching systems that have been in place for decades might benefit from a new generation of algorithmic processing. But here at user level, the big change — and the big dread — is AI replacing low- and mid-level human workers.

We’re already seeing attempts at this. And so far they’ve resulted in problems so predictable, ChatGPT itself could have told you they were coming. As impressive as these tools are becoming, and as much as they’ll be refined in the future, I can’t see them effectively replacing tons of human writers, analysts, artists, et cetera.

Not that people won’t try — especially people who hold the purse strings. It’s going to come down to how much of a reduction in quality those making the final call are willing to tolerate in order to save money on employees. And how expensive those results will be to produce, even if they’re inevitably cheaper than using squishy humans. Azure data centers don’t grow on trees, and neither do terawatts of power needed to run them.

Full speed ahead, wherever we’re going

Microsoft executives are well aware of all these issues, to a far more nuanced degree than you and I. But I think someone’s told them to go full speed ahead on AI, and damn the torpedoes of actual functionality and results. The push is coming from the top, and a certain amount of pain at the bottom (for both Microsoft’s product team and its corporate customers) will be tolerated. At least for now.


We won't see the aftermath for a couple of years at least. Will this be a Windows 8 moment, remembered mostly as a painful lesson in what not to do? Or will I be proven wrong, and will AI become an essential part of every worker's digital toolkit…or at least the toolkit of whatever workers remain after so many of them are replaced?

Time will tell. I hope I’ll be around to help time tell it.